When most people think about AI, they tend to think of ChatGPT, in the same way that "search" means Google. But there's a big world of AI beyond chatbots that is well worth exploring.

One example is the growing community of people running AI models on their home computers instead of using third-party cloud services like OpenAI or Google Gemini.

But why would I do that, you ask? Well, for one thing, you get to control every aspect of the AI you're using, from the choice of model to the subject matter you use it for.

Everything runs entirely offline with no internet needed, so keeping your data private is no problem. It also means you can do your AI work anytime, anywhere, even without a WiFi connection.

There's also the question of cost, which can mount up quickly if you use commercial subscription services for larger projects. By using your own local AI model, you may have to put up with a less powerful AI, but the trade-off is that it costs pennies in electricity to run.
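If you want to check the "pennies" claim for your own setup, the arithmetic is simple: power draw in watts multiplied by hours of use, divided by 1,000, gives kilowatt-hours, which you then multiply by your electricity tariff. The short Python sketch below does just that. The 300 W draw, two hours of use and $0.15 per kWh rate are hypothetical figures, so plug in your own.

```python
def electricity_cost(watts: float, hours: float, price_per_kwh: float) -> float:
    """Estimate what it costs to run a device drawing `watts` for `hours`."""
    kwh = watts * hours / 1000  # watt-hours -> kilowatt-hours
    return kwh * price_per_kwh


# Hypothetical figures: a 300 W desktop working flat out for 2 hours,
# on a tariff of $0.15 per kWh. Substitute your own numbers.
cost = electricity_cost(watts=300, hours=2, price_per_kwh=0.15)
print(f"${cost:.2f}")  # prints $0.09
```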

Finally, Google has just started running advertising in its Gemini chatbots, and it's likely that other AI providers will soon follow suit. Intrusive ads when you're trying to get stuff done can get old very fast.

How to get started

There are three main elements to getting your local AI up and running. First, you need the right kind of computer; second, you'll need to pick the right AI model; and finally, there's the matter of installing the right software to run your model. Oh, and you'll also need a little bit of know-how to tie it all together.

1. The computer

(Image: © Pixabay)

Entire books could be written (and probably will be) on choosing the right computer to run your local AI. There's a huge number of variables, but for a simple start, only three things really matter: total amount of RAM, processing power, and storage space.

The basic rule of thumb is that you should use the most powerful computer you can afford, because AI is power hungry. But it's not only about the processor; just as important is the amount of memory (RAM) you have installed.

The bottom line: forget about using your old Windows XP machine. You're going to need a modern computer with at least 8GB of RAM, a decent graphics card with at least 6GB of VRAM, and enough storage space (ideally an SSD) to hold the models you'll be using. The operating system is irrelevant; Windows, Mac, or Linux are all fine.

These things matter because the more power your computer has, the faster the AI will run. With the right configuration, you'll be able to run better, more powerful AI models at a faster speed. That's the difference between watching an AI response stutter across the screen one painful letter at a time and seeing whole paragraphs of text scroll down in milliseconds.

It's also the difference between running a half-decent AI model that rarely hallucinates and delivers professional-quality answers and results, and running a model that responds like the village idiot.

Quick tip: If you bought your computer within the last two to three years, chances are you'll be good to go as long as you have enough RAM, especially if it's a gaming computer.
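Before buying anything, it's worth checking what you already have. Here's a rough Python sketch that compares your specs against the minimums suggested above (8GB of RAM, 6GB of VRAM, plus enough free disk space for the model). It rests on a few assumptions: VRAM can't be read with Python's standard library, so you type that in yourself; RAM detection uses `os.sysconf`, which works on Linux and some other Unix-like systems but not on Windows, where the helper simply returns `None`; and the 5GB model size and 16GB fallback in the example are hypothetical figures.

```python
import os
import shutil

# Minimums suggested in this article; a rough starting point, not a hard limit.
MIN_RAM_GB = 8
MIN_VRAM_GB = 6


def detect_ram_gb():
    """Total physical RAM in GB, or None if this platform doesn't expose it."""
    try:
        # os.sysconf exists on Linux and some other Unix-like systems, not on Windows.
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024**3
    except (AttributeError, ValueError, OSError):
        return None


def disk_free_gb(path="."):
    """Free space, in GB, on the drive that holds `path`."""
    return shutil.disk_usage(path).free / 1024**3


def check_hardware(ram_gb, vram_gb, free_disk_gb, model_size_gb):
    """Return a list of problems; an empty list means no obvious blockers."""
    problems = []
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb:.0f}GB is below the suggested {MIN_RAM_GB}GB")
    if vram_gb < MIN_VRAM_GB:
        problems.append(f"VRAM: {vram_gb:.0f}GB is below the suggested {MIN_VRAM_GB}GB")
    if free_disk_gb < model_size_gb:
        problems.append(f"Disk: {free_disk_gb:.1f}GB free, but the model needs {model_size_gb}GB")
    return problems


if __name__ == "__main__":
    ram = detect_ram_gb()
    print(f"Detected RAM: {ram:.1f}GB" if ram else "Couldn't detect RAM; enter it by hand.")
    # Hypothetical example: VRAM typed in by hand (8GB), a 5GB model download,
    # and a 16GB RAM fallback if automatic detection failed.
    issues = check_hardware(ram_gb=ram or 16, vram_gb=8,
                            free_disk_gb=disk_free_gb(), model_size_gb=5)
    print("\n".join(issues) or "No obvious blockers for a small local model.")
```

An empty result isn't a guarantee that a given model will run smoothly; it just means nothing falls below the article's rough minimums.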

2. The model

(Image: © Freepik)

AI model development is moving so fast that this section will be out of date by next week. And I'm not joking. But for now, here are my recommendations.

The first rule is to match the size of your chosen model to the capabilities of your computer. So if you have the bare minimum of RAM and a weak graphics card, you'll need to pick a smaller, less powerful AI model, and vice versa.

Quick tip: always start with a model that is smaller than your RAM and see how it works. For example, if you have 8GB of RAM, pick a model that is around 4 to 5GB in size. Trial and error is a good idea at this point.
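To turn that rule of thumb into numbers: a model's download size is roughly its parameter count multiplied by the number of bits stored per weight, divided by eight. A 4-bit (quantized) model with 8 billion parameters therefore works out to about 8 × 4 ÷ 8 = 4GB, right in the 4 to 5GB range for an 8GB machine. The Python sketch below is only an approximation: real model files carry some extra overhead, and the 60% headroom figure is an assumption, chosen so that 8GB of RAM allows a model of roughly 4.8GB.

```python
def estimated_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in GB (1GB = 10**9 bytes).

    Each weight takes bits_per_weight / 8 bytes; real files add a little overhead.
    """
    return params_billions * bits_per_weight / 8


def fits_in_ram(model_gb: float, ram_gb: float, headroom: float = 0.6) -> bool:
    """True if the model uses at most `headroom` of RAM (assumed 60%),
    leaving the rest for the operating system and other apps."""
    return model_gb <= ram_gb * headroom


# Compare a few model sizes, all quantized to 4 bits per weight, on an 8GB machine.
for params in (4, 8, 14):
    size = estimated_size_gb(params, bits_per_weight=4)
    print(f"{params}B at 4-bit = {size:.1f}GB, fits in 8GB RAM: {fits_in_ram(size, 8)}")
# 4B at 4-bit = 2.0GB, fits in 8GB RAM: True
# 8B at 4-bit = 4.0GB, fits in 8GB RAM: True
# 14B at 4-bit = 7.0GB, fits in 8GB RAM: False
```

The same arithmetic shows why quantization matters: that 8-billion-parameter model stored at 16 bits per weight would need about 16GB.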

The good news is that more and more open source models are coming onto the market that are perfectly tailored to modest computers. What's more, they perform very well, in some cases as well as cloud-based alternatives. And this situation is only going to get better as the AI market matures.

Three of my personal favorite models right now are Qwen3, DeepSeek and Llama. They are all free and open source to different degrees. The first two can be used for commercial purposes, and so can Llama, but its license comes with more strings attached. You can find a list of all the available open source AI models on Hugging Face. There are literally thousands of them in all sizes and capabilities, which can make it hard to pick the right one. That's why the next section is so important.

3. The software

(Image: © NPowell)

There are a number of great apps on the market, called wrappers or front ends, that make it easy to run AI models. You just need to download and install the software, pick the model you want to use, and off you go. Usually the app will warn you if the model you've chosen won't run properly on your computer, which makes the whole thing a lot less painful. Here are a couple of my personal favorites.

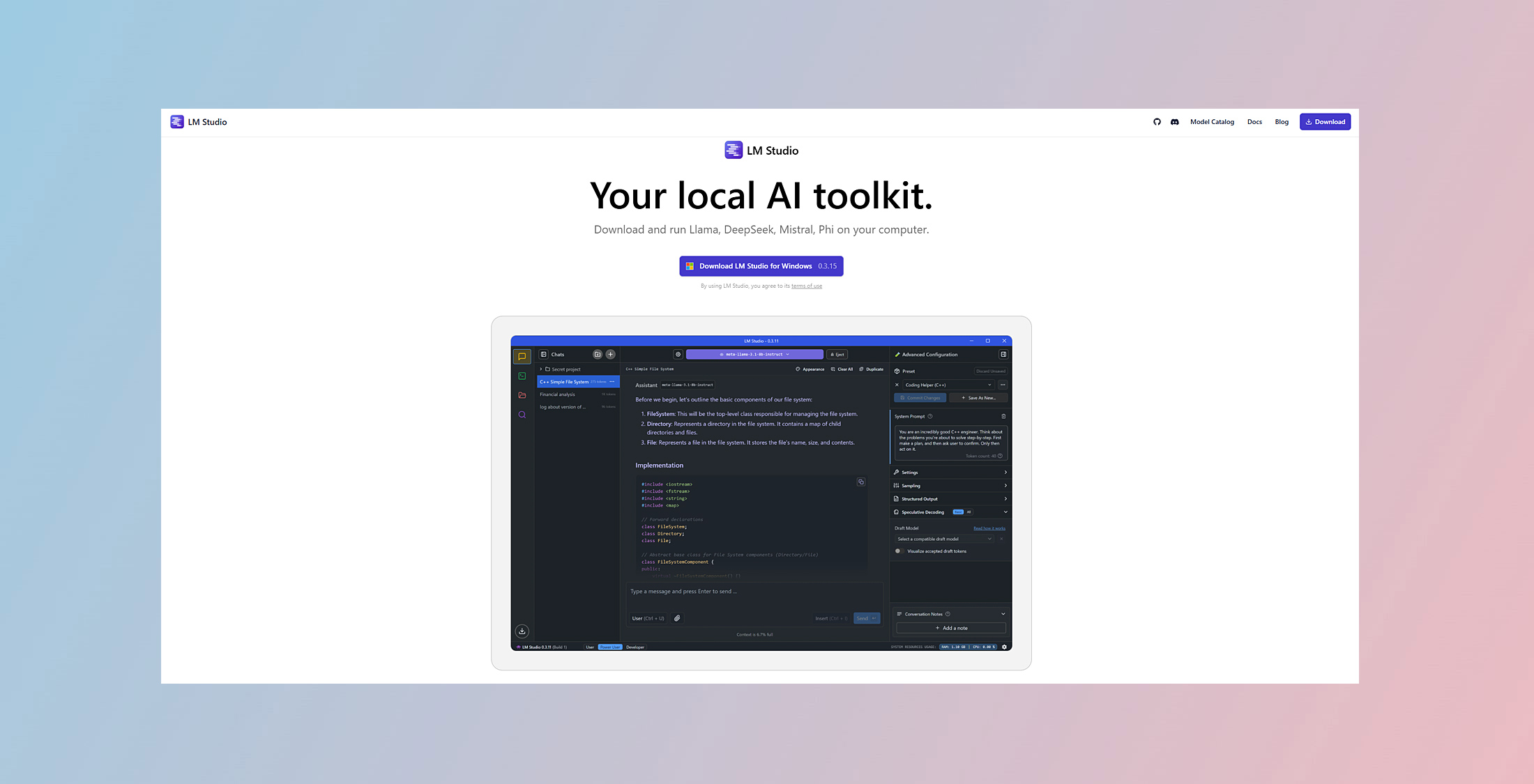

LM Studio

LM Studio is a free proprietary app that offers an easy way to use models on your computer. It runs on Windows, Mac and Linux, and once it's installed, you can add a model straight away by searching for it and choosing the right size. To do this, click the Discover icon in the left sidebar to open the model search page. This scans Hugging Face for suitable models and gives you lots of useful information to help you make the right choice. It's a very handy tool.
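LM Studio can also act as a local server that other programs talk to. Recent versions expose an OpenAI-compatible API, by default at `http://localhost:1234/v1`, once you switch the server on inside the app; check your version's settings, because the port and menu names may differ. The Python sketch below assumes that setup and uses only the standard library. The model name you pass in is a placeholder: use the identifier LM Studio shows for the model you've loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is switched on and listening on its
# default port (1234) with an OpenAI-compatible chat endpoint.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(model: str, prompt: str) -> dict:
    """OpenAI-style chat payload containing a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat response."""
    return response["choices"][0]["message"]["content"]


def ask(model: str, prompt: str, url: str = LMSTUDIO_URL) -> str:
    """Send one prompt to the local server and return the reply text."""
    body = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    # Local models can be slow on modest hardware, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return extract_reply(json.load(resp))
```

With the server running, `ask("your-model-name", "Explain RAM versus VRAM in one sentence.")` should return the model's reply as a string. The payload and response helpers are kept separate from the network call so you can try them out without a server.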

PageAssist

My personal favorite free, open-source tool for running local models is PageAssist. It's a web browser extension that makes it easy to access any Ollama AI models on your machine. You'll need to install the free Ollama program first and follow its instructions to download models, so this method requires a bit more expertise than LM Studio. But PageAssist is an excellent way of running local AI models at the click of a button, offering not just chat, but also web search and access to your own personal knowledge base.

Ollama

Ollama is rapidly becoming the number one way to install and run free, open source AI models on any small computer. It's a free model server that integrates with a wide range of third-party applications, and it can help put local AI on a level playing field with the big cloud services.
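Because Ollama is a server, you can also script it directly. The Python sketch below assumes Ollama is running on its default port (11434) and uses two of its REST endpoints: `GET /api/tags` to list the models you've downloaded, and `POST /api/generate` to send a prompt. Setting `"stream": false` asks for one complete JSON reply rather than a stream of chunks. Any model name you pass is up to you; use whatever `ollama list` shows on your machine.

```python
import json
import urllib.request

# Assumption: Ollama is installed and running on its default port, 11434.
OLLAMA_BASE = "http://localhost:11434"


def model_names(tags_response: dict) -> list:
    """Extract model names from the JSON that GET /api/tags returns."""
    return [m["name"] for m in tags_response.get("models", [])]


def list_local_models(base: str = OLLAMA_BASE) -> list:
    """Names of the models already downloaded to this machine."""
    with urllib.request.urlopen(f"{base}/api/tags", timeout=10) as resp:
        return model_names(json.load(resp))


def generate(model: str, prompt: str, base: str = OLLAMA_BASE) -> str:
    """Send one prompt to POST /api/generate and return the full reply text."""
    payload = {"model": model, "prompt": prompt, "stream": False}  # one JSON reply, no chunks
    req = urllib.request.Request(
        f"{base}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```

For example, after downloading a model with `ollama pull`, `list_local_models()` should include it, and `generate(name, "Summarize why local AI helps privacy.")` should return the reply text. Front ends like PageAssist talk to this same local server.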

Final Thoughts

There's no question that local AI models will grow in popularity as computers get more powerful and the AI tech matures. Applications such as personal healthcare or finance, where sensitive data makes privacy critical, will also help drive the adoption of these clever little offline assistants.

Small models are also increasingly being used in remote operations such as agriculture, or in places where internet access is unreliable or impossible. More AI applications are also migrating to our phones and smart devices, especially as the models get smaller and more capable and processors get beefier. The future could be very interesting indeed.

More from Tom's Guide
